Google Releases Gemini 2.5 Deep Think

Hey there 👋

We hope you're excited to discover what's new and trending in AI, ML, and data science this week.

Here is your 5-minute pulse...

But first, a quick message from our partner 👇

An AI scheduling assistant that lives up to the hype.

Skej is an AI scheduling assistant that works just like a human. You can CC Skej on any email, and watch it book all your meetings. It also handles scheduling, rescheduling, and event reminders.

Imagine life with a 24/7 assistant who responds so naturally, you’ll forget it’s AI.

Smart Scheduling

Skej handles time zones and can scan booking links.

Customizable

Create assistants with their own names and personalities.

Flexible

Connect to multiple calendars and email addresses.

Works Everywhere

Write to Skej on email, text, WhatsApp, and Slack.

Whether you’re scheduling a quick team call or coordinating a sales pitch across the globe, Skej gets it done fast and effortlessly. You’ll never want to schedule a meeting yourself, ever again.

The best part? You can try Skej for free right now.

print("News & Trends")

Google Releases Gemini 2.5 Deep Think in Gemini App (5 min. read)

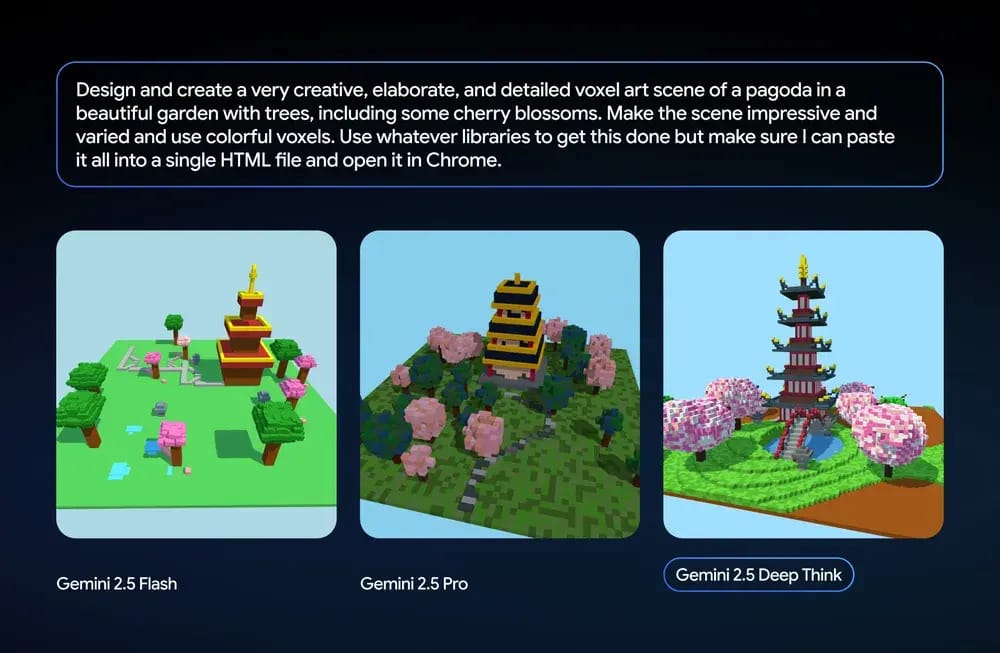

Image source: Google

Google has unveiled Gemini 2.5 Deep Think, a premium reasoning mode in its flagship Gemini 2.5 Pro that spins up multiple AI agents to brainstorm and iterate in parallel, then converges on the strongest solution. It delivers state-of-the-art performance across math, science, and coding benchmarks, including bronze-level results on the 2025 IMO, and is optimized for faster everyday use. The feature is currently available only to Google AI Ultra subscribers in the Gemini app, where it is switched on through a toggle in the prompt bar.

Image source: TechRadar

Thousands of ChatGPT conversations shared via OpenAI’s “Make link public” feature were inadvertently indexed by Google and archived by the Wayback Machine. The issue stemmed from an opt-in checkbox labeled “Make this chat discoverable,” which many users misunderstood. As a result, sensitive chats became publicly accessible. OpenAI has since disabled the feature, is working with search engines to de-index the content, and urges users to delete old shared links if privacy is a concern.

ManusAI Introduces Wide Research: 100 Agents at Work (3 min read)

Image source: ManusAI

ManusAI’s new Wide Research feature spins up over 100 general-purpose agents to tackle large-scale tasks in parallel, accelerating research and creative workflows. Each agent runs as an independent Manus instance inside its own virtual machine, and the agents coordinate via a system-level protocol to merge their insights. The result is higher speed, more diverse output, and flexible task handling across domains. Wide Research is now live for Pro users, with a gradual rollout planned, though details on its resource demands and benchmark performance remain limited.
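The fan-out and merge pattern described above can be sketched in a few lines of Python. This is only an illustration of the general idea, not ManusAI's implementation: `run_agent` and `wide_research` are hypothetical names, threads stand in for separate virtual machines, and the "agent" simply echoes its subtask.

```python
from concurrent.futures import ThreadPoolExecutor


def run_agent(subtask: str) -> dict:
    """Hypothetical stand-in for one independent agent working on one subtask."""
    # A real agent would browse, call tools, and reason; this one just echoes.
    return {"subtask": subtask, "finding": f"summary of {subtask}"}


def wide_research(subtasks: list[str], max_workers: int = 8) -> dict[str, str]:
    """Fan out one agent per subtask, then merge the findings into one report."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # map() returns results in input order, so the merged report is stable.
        results = list(pool.map(run_agent, subtasks))
    return {r["subtask"]: r["finding"] for r in results}


if __name__ == "__main__":
    report = wide_research([f"company {i}" for i in range(100)])
    print(len(report), "findings merged")
```

The merge step here is a plain dictionary; a real system would need to reconcile overlapping or conflicting findings.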

OpenAI Strikes Partnership to Bring Stargate to Europe (2 min. read)

Image source: WSJ

OpenAI has teamed with Nscale and Aker to launch Stargate Norway, its first European AI gigafactory, based in Narvik, Norway, under the “OpenAI for Countries” initiative. The $1 billion project will deploy around 100,000 Nvidia GPUs by the end of 2026 in a renewable-powered 230 MW facility, with planned expansion to 520 MW. It leverages Narvik’s hydropower, cool climate, and liquid cooling to build sustainable, sovereign AI infrastructure and serve public and private sector compute needs across Northern Europe.

print("Applications & Insights")

2025 Mid-Year LLM Market Update: Foundation Model Landscape + Economics (5 min. read)

Menlo Ventures’ mid-2025 LLM Market Update highlights a sharp rise in enterprise demand, with API spend more than doubling in six months and inference now outpacing training. The report shows closed-source models dominate usage, led by Anthropic, OpenAI, and Google. Code generation stands out as the first major enterprise use case, signaling that LLM adoption is shifting from experimentation to real business impact.

Implementing MCP Servers in Python: An AI Shopping Assistant with Gradio

Hugging Face’s blog explains how Gradio now supports the Model Context Protocol (MCP), enabling LLMs to tap into external tools hosted on Spaces, like image editors or virtual try-on models. With minimal Python code and docstrings as APIs, developers can spin up MCP servers that stream real-time progress, handle file uploads, and auto-translate OpenAPI specs into callable tools. This infrastructure turns Gradio-powered Spaces into an MCP app store, giving large language models tool-based capabilities on demand.
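To make the "docstrings as APIs" idea concrete, here is a minimal sketch using only the Python standard library. It is not Gradio's implementation; `describe_tool` and `letter_counter` are hypothetical names, and the output dictionary is a simplified stand-in for a real tool schema. It only shows how a function's signature and docstring already carry enough information to describe a tool to an LLM.

```python
import inspect


def letter_counter(word: str, letter: str) -> int:
    """Count how many times a letter appears in a word."""
    return word.lower().count(letter.lower())


def describe_tool(fn) -> dict:
    """Build a simplified tool description from a signature and docstring."""
    sig = inspect.signature(fn)
    params = {
        # Use the annotation's name ("str", "int") when one is available.
        name: getattr(p.annotation, "__name__", str(p.annotation))
        for name, p in sig.parameters.items()
    }
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": params,
    }


if __name__ == "__main__":
    print(describe_tool(letter_counter))
```

The appeal of this design is that the function stays ordinary Python: improving the docstring or type hints improves the tool description with no separate schema file to maintain.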

Implementing Qwen3 from Scratch (with Notebook)

Sebastian Raschka’s new Jupyter notebook in chapter 5 of his LLMs‑from‑Scratch series walks you through implementing Qwen3 inference in PyTorch from the ground up. It provides a lean yet runnable Qwen3‑0.6B model with downloadable official weights, clear tokenizer setup, and basic generation loops geared for educational use. The tutorial emphasizes readability over optimization while still supporting GPU, compilation and reasoning‑mode switching for hands‑on learning.
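For readers new to the term, a "basic generation loop" boils down to repeatedly asking the model for next-token scores and appending the best one. The sketch below is a framework-free illustration of greedy decoding, not code from the notebook: `greedy_generate` and `toy_model` are hypothetical names, and the toy model is a stand-in with a five-token vocabulary rather than Qwen3.

```python
from typing import Callable, Optional, Sequence


def greedy_generate(
    next_token_logits: Callable[[Sequence[int]], Sequence[float]],
    prompt_ids: Sequence[int],
    max_new_tokens: int,
    eos_id: Optional[int] = None,
) -> list[int]:
    """Greedy decoding: append the highest-scoring token one step at a time."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        logits = next_token_logits(ids)
        # argmax over the vocabulary
        next_id = max(range(len(logits)), key=logits.__getitem__)
        if next_id == eos_id:
            break  # stop without appending the end-of-sequence token
        ids.append(next_id)
    return ids


def toy_model(ids: Sequence[int]) -> list[float]:
    """Hypothetical model: always prefers (last token + 1) modulo 5."""
    scores = [0.0] * 5
    scores[(ids[-1] + 1) % 5] = 1.0
    return scores


if __name__ == "__main__":
    print(greedy_generate(toy_model, [0], max_new_tokens=3))
```

A real implementation would run the model on a tensor of token IDs and usually cache earlier computations, but the control flow is the same.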

Agents vs Workflows: Why Not Both? (Video)

Sam Bhagwat, co-founder of Mastra.ai, presents his take on the agents versus workflows debate in AI. He argues against the idea of a single "right way" to build, and criticizes complex, graph-based APIs. Defining agents as turn-based games and workflows as rules engines, he proposes that the most effective approach is to compose them, using agents as steps in a workflow or vice versa to achieve greater reliability and performance. The presentation emphasizes that these two approaches are not mutually exclusive.
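The composition idea can be sketched in plain Python. This is an illustration under simplifying assumptions, not Mastra's API: `run_workflow`, `make_agent`, and the example steps are all hypothetical names, state is a plain dictionary, and the agent's "policy" is an ordinary function rather than an LLM.

```python
from typing import Callable

State = dict
Step = Callable[[State], State]


def run_workflow(steps: list[Step], state: State) -> State:
    """Workflow: a fixed sequence of steps applied in order."""
    for step in steps:
        state = step(state)
    return state


def make_agent(choose_action: Callable[[State], str],
               actions: dict[str, Step],
               max_turns: int = 10) -> Step:
    """Agent: a loop that picks one action per turn until it chooses 'done'."""
    def agent(state: State) -> State:
        for _ in range(max_turns):
            name = choose_action(state)
            if name == "done":
                break
            state = actions[name](state)
        return state
    return agent


# Hypothetical example steps and policy.
def load(state: State) -> State:
    return {**state, "drafts": 0}

def draft(state: State) -> State:
    return {**state, "drafts": state["drafts"] + 1}

def publish(state: State) -> State:
    return {**state, "published": True}

def policy(state: State) -> str:
    # Keep drafting until three drafts exist, then stop.
    return "draft" if state["drafts"] < 3 else "done"


if __name__ == "__main__":
    # The agent is just another step inside the workflow.
    pipeline = [load, make_agent(policy, {"draft": draft}), publish]
    print(run_workflow(pipeline, {"topic": "MCP"}))
```

Because an agent built this way has the same shape as any other step, the reverse composition also works: a whole workflow can be wrapped in a function and offered to an agent as one of its actions.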

print("Tools & Resources")

TRENDING MODELS

Text Generation

GLM‑4.5

⇧ 11,303 Downloads

This foundation model blends thinking and non-thinking modes to power agentic intelligence, reasoning, and coding. With 355B parameters (32B active), it ranks near the top of benchmarks for reasoning and agentic tasks, and supports hybrid reasoning and tool use.

Text Generation

GLM‑4.5‑Air

⇧ 753 Downloads

A compact variant of GLM-4.5 with 106B total parameters (12B active), offering reasoning, coding, and agent tool use in a smaller footprint. It supports thinking mode but is optimized for efficiency and quantized deployment.

Text Generation

Qwen3-30B-A3B-Instruct-2507

⇧ 70K Downloads

A 30B-parameter mixture-of-experts LLM (about 3B active, as the A3B in its name indicates) with native 256K-token context (extendable). This Instruct release is a general instruction-following model that runs in non-thinking mode only; step-by-step reasoning is handled by a separate Thinking variant. Built for coding, math, logic, and multilingual tasks.

Text Generation

Qwen3-Coder-30B-A3B-Instruct

⇧ 60K Downloads

A variant of Qwen3 focused on coding and agentic tasks, optimized for long‑context multi‑step programming workflows. Excels in agentic browser use and repository‑scale understanding.

Text Generation

GLM‑4.5-Air‑AWQ‑FP16Mix

⇧ 753 Downloads

A quantized AWQ variant of GLM-4.5-Air with mixed FP16 precision, built for efficient deployment on constrained hardware while retaining agentic reasoning and coding performance in a smaller footprint.

TRENDING AI TOOLS

🚀 Learnify: Turn any content into an AI-generated video course in minutes.

🧠 NEO: An autonomous AI agent that builds and deploys ML workflows for you.

👁️ Command A Vision: A multimodal AI that understands images and text for enterprise use.

🤖 Jenova: An AI agent that runs tools through the Model Context Protocol.

That’s it for today!

Before you go, we’d love to know what you thought of today's newsletter to help us improve the pulse experience for you.

See you soon,

Andres