Neural Pulse

Gemini with Deep Think Officially Takes Gold

DeepMind’s Gemini Deep Think has hit gold at the IMO, flawlessly solving five of six ultra-hard math problems in natural language.

Hey there 👋

We hope you're excited to discover what's new and trending in AI, ML, and data science this week.

Here is your 5-minute pulse...

print("News & Trends")

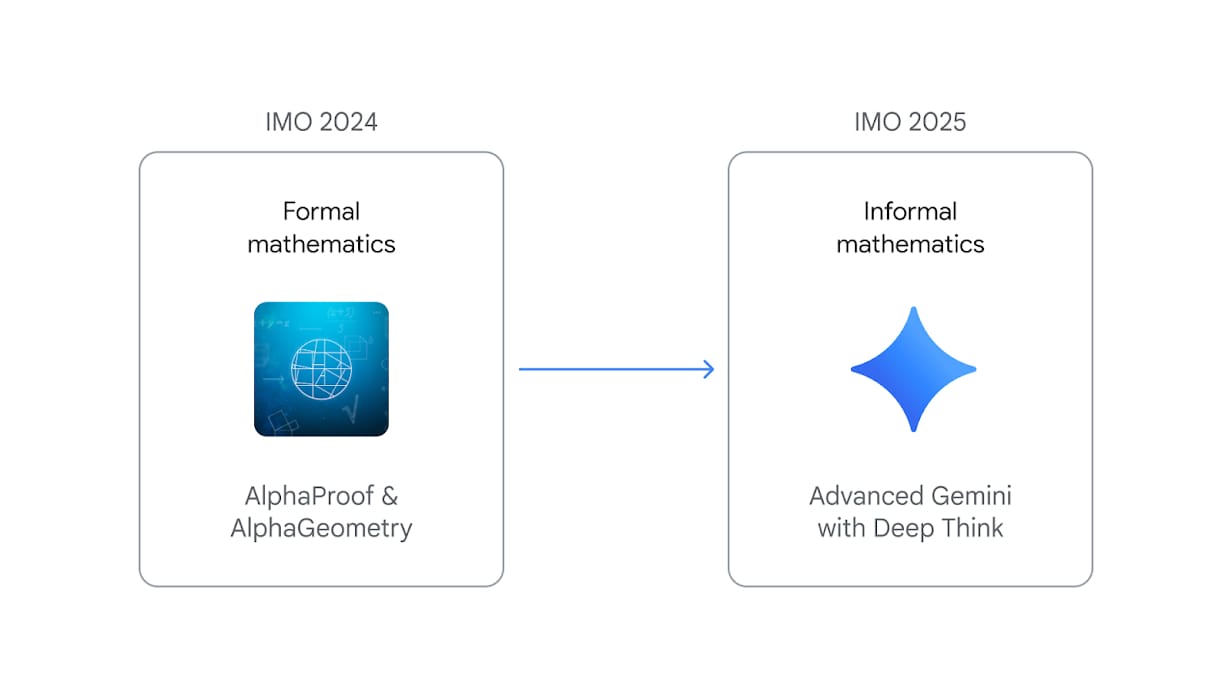

Image source: DeepMind

DeepMind’s Gemini Deep Think has hit gold at the IMO. It flawlessly solved five of the six ultra-hard problems in natural language within the 4.5-hour contest window, earning 35 of 42 points and official gold-medal status. Last year’s silver-medal result came from AlphaProof and AlphaGeometry 2, which required the problems to be translated into formal languages such as Lean. This system needed no such translation and ran end-to-end, using new “parallel thinking” and reinforcement-learning techniques for multi-step reasoning. It marks a major leap toward flexible AI math reasoning under contest time limits.

Brain-Inspired Open-Source Hierarchical Reasoning Model (5 min. read)

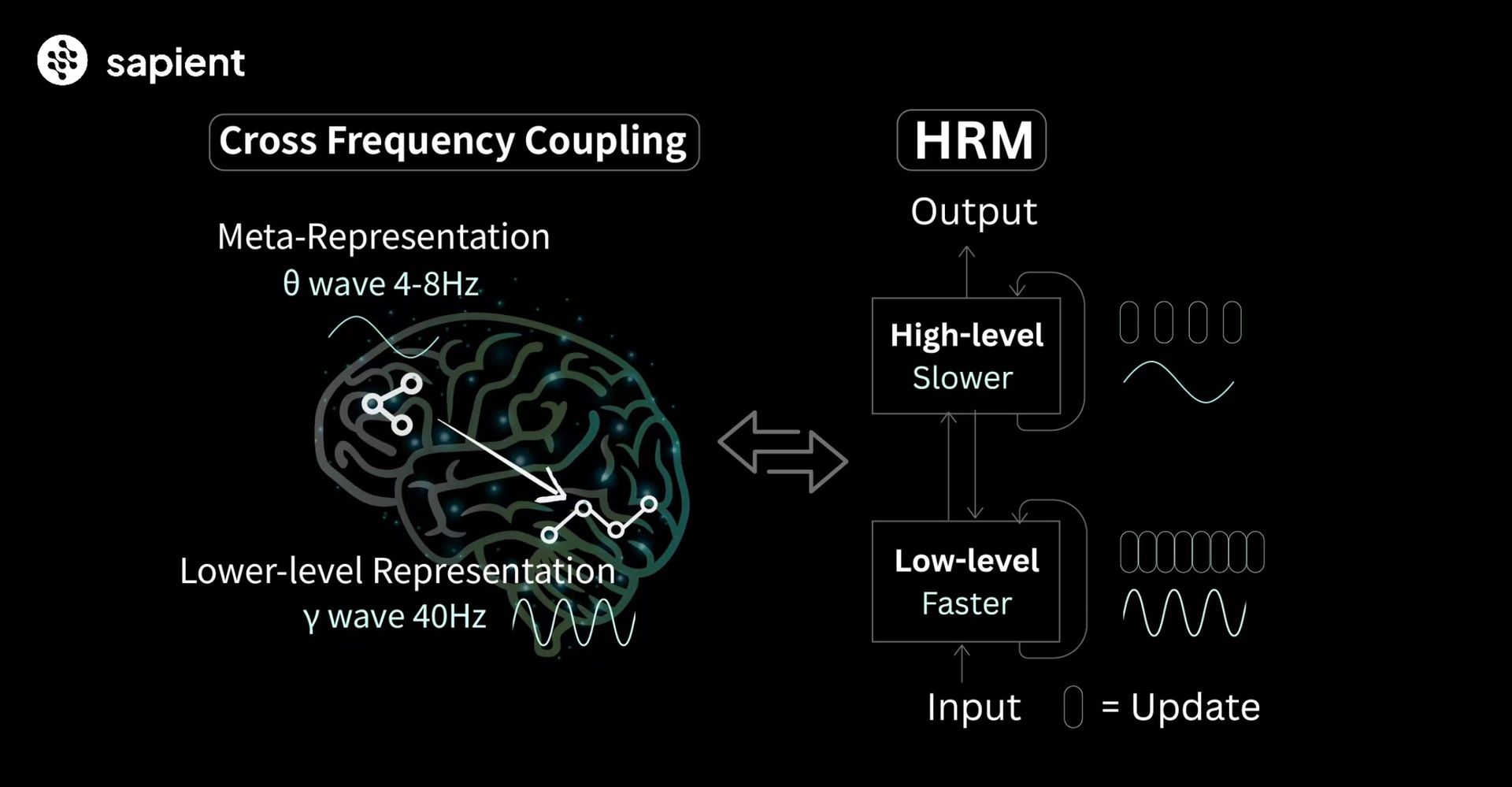

Image source: Sapient

Sapient Intelligence has open-sourced HRM, a brain-inspired Hierarchical Reasoning Model. With just 27M parameters, 1,000 training examples, and no pre-training, it solves complex logic puzzles such as Sudoku-Extreme and large mazes, and it reaches about 5% on ARC-AGI-2, well ahead of much larger models. HRM pairs a slow, abstract “CEO” module that plans with a fast “worker” module that carries out detailed computation. This design yields near-perfect accuracy on mazes and high data efficiency, and it marks a milestone toward lean, on-device AGI-like reasoning.
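The two-timescale idea can be sketched as nested loops. This is a toy illustration built on several assumptions and is not Sapient's code. The update functions, their weights, and the names `hierarchical_forward`, `n_cycles`, and `t_steps` are all hypothetical. The real modules are trained recurrent networks rather than fixed tanh updates. The sketch shows only the control flow: the fast module takes several steps for every single update of the slow module.

```python
import math
from typing import List, Tuple

Vector = List[float]


def low_update(z_l: Vector, z_h: Vector, x: Vector) -> Vector:
    # Hypothetical fast "worker" step: combines its own state, the current plan, and the input.
    return [math.tanh(0.5 * zl + 0.3 * zh + 0.2 * xi) for zl, zh, xi in zip(z_l, z_h, x)]


def high_update(z_h: Vector, z_l: Vector) -> Vector:
    # Hypothetical slow "CEO" step: revises the plan using the worker's latest state.
    return [math.tanh(0.7 * zh + 0.3 * zl) for zh, zl in zip(z_h, z_l)]


def hierarchical_forward(x: Vector, n_cycles: int, t_steps: int) -> Tuple[Vector, Vector, int, int]:
    z_h = [0.0] * len(x)  # slow, abstract state
    z_l = [0.0] * len(x)  # fast, detailed state
    low_calls = high_calls = 0
    for _ in range(n_cycles):
        # The fast module runs t_steps times while the plan z_h stays fixed...
        for _ in range(t_steps):
            z_l = low_update(z_l, z_h, x)
            low_calls += 1
        # ...then the slow module updates once per cycle.
        z_h = high_update(z_h, z_l)
        high_calls += 1
    return z_h, z_l, low_calls, high_calls


z_h, z_l, low_calls, high_calls = hierarchical_forward([1.0, -0.5, 0.25], n_cycles=2, t_steps=4)
print(low_calls, high_calls)  # 8 2
```

With 2 cycles of 4 fast steps each, the worker updates 8 times and the planner updates 2 times.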

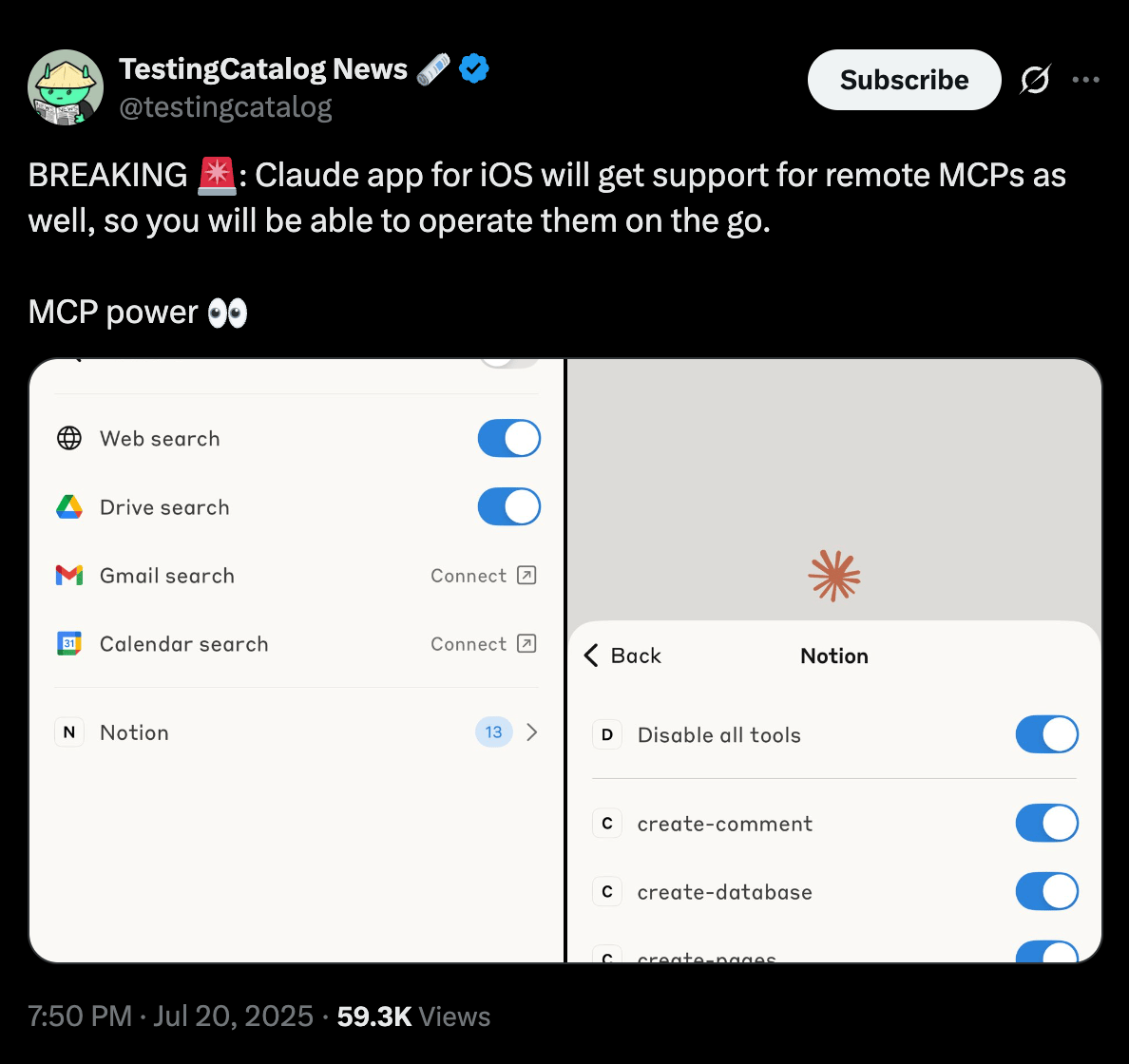

Image source: TestingCatalog

Anthropic is testing a major upgrade to Claude’s iOS app. The upgrade adds three features. Long-awaited memory recall lets Claude reference past chats. An Artifacts Gallery helps you manage saved outputs. Remote MCP support lets you tap into external tools such as Notion away from the desktop. These internal tests hint at a future where mobile Claude matches the full context-aware, multi-tool capabilities of its web counterpart, though no release date has been shared.

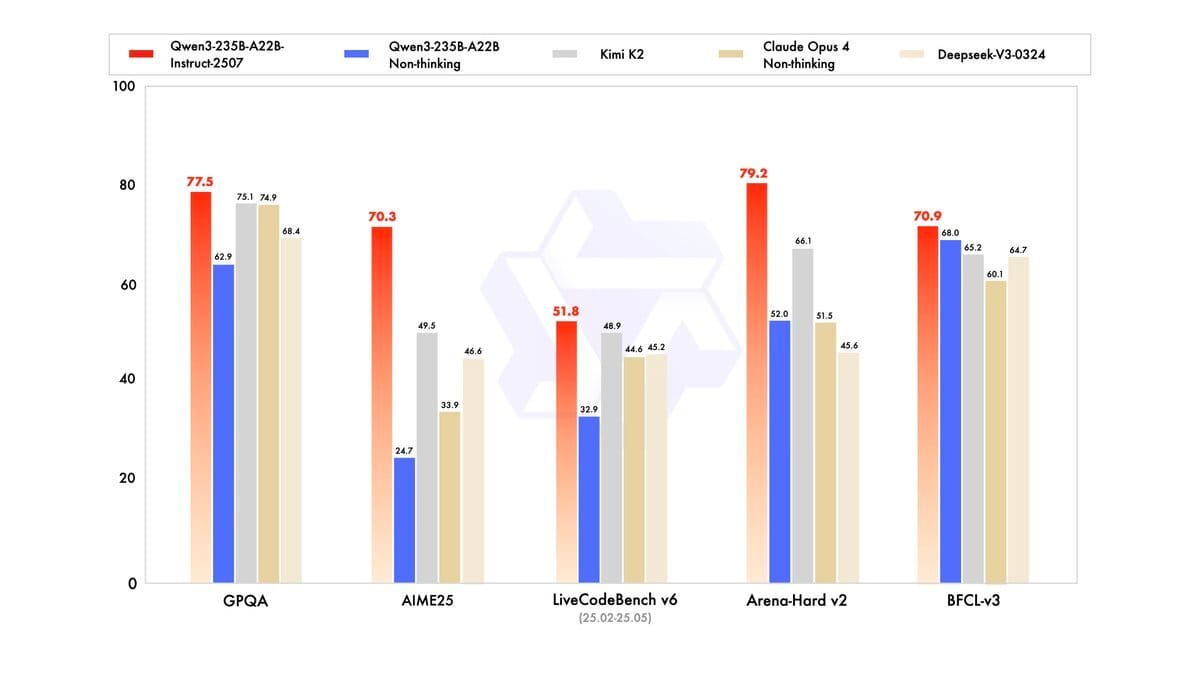

Qwen3 Just Shook Up the Open-Source LLM Race (1 min. read)

Image source: Qwen

Alibaba just dropped a fully open-source, non-thinking version of Qwen3 that outperforms Kimi K2 and even challenges Claude Opus 4 and GPT-4o on benchmarks. It activates 22B of its 235B parameters per token, supports a 256K-token context window, and is now the free default on Qwen Chat. By releasing its reasoning model separately and leaning into openness, Alibaba is making a bold play for AI leadership despite Western export limits on advanced chips.
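“Activates 22B of 235B parameters” describes Mixture-of-Experts (MoE) routing. A router picks a few experts for each token, so only about 9% of the weights (22/235 ≈ 0.094) do work on any given token. The sketch below shows generic top-k routing. The eight scalar “experts”, the hand-picked router logits, and `k=2` are all assumptions for illustration. Qwen3's actual expert count, router, and expert layers are different.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: float,
    gate_logits: Sequence[float],
    experts: Sequence[Callable[[float], float]],
    k: int,
) -> Tuple[float, List[int]]:
    # Router: keep only the k experts with the highest scores for this token.
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    # Renormalize the gate weights over the selected experts.
    weights = softmax([gate_logits[i] for i in top])
    # Only the selected experts are evaluated; the others stay idle for this token.
    y = sum(w * experts[i](x) for w, i in zip(weights, top))
    return y, top


# Eight toy "experts", each just scaling its input by a different factor.
experts = [lambda v, s=s: s * v for s in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
y, chosen = moe_forward(1.0, logits, experts, k=2)
print(chosen)  # [4, 1]
```

The total parameter count sets how much the model can store. The active count sets how much compute each token costs.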

print("Applications & Insights")

Don't bother parsing: Just use images for RAG (12 min. read)

Morphik challenges traditional RAG pipelines by treating entire document pages as images instead of extracting text and structure, preserving tables, charts and diagrams intact. They combine ColPali vision embeddings and MUVERA indexing to deliver lightning-fast (~30 ms) multimodal retrieval with over 95% accuracy on finance docs compared to ~67–72% for text-only systems. This vision-first approach dramatically simplifies document workflows and boosts retrieval fidelity.
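ColPali retrieval relies on “late interaction” scoring. A page image yields one vector per patch, and a query yields one vector per token. The page's score sums, over the query tokens, each token's best match among the patches. The sketch below shows only this MaxSim scoring and uses hand-made 2-d vectors as placeholders for real embeddings. MUVERA's step of compressing these multi-vectors into fixed-dimensional encodings for fast search is not shown, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def maxsim(query: Sequence[Vector], page: Sequence[Vector]) -> float:
    # Late interaction: match each query-token vector to its most similar
    # page-patch vector, then sum those best-match scores.
    return sum(max(dot(q, p) for p in page) for q in query)


def rank_pages(query: Sequence[Vector], pages: Dict[str, Sequence[Vector]]) -> List[str]:
    # Highest-scoring pages first.
    return sorted(pages, key=lambda name: maxsim(query, pages[name]), reverse=True)


# Toy 2-d "embeddings"; real ColPali vectors are learned and much wider.
query = [[1.0, 0.0], [0.0, 1.0]]
pages = {
    "text_page": [[0.3, 0.3]],
    "chart_page": [[0.9, 0.1], [0.2, 0.8]],
}
print(rank_pages(query, pages))  # ['chart_page', 'text_page']
```

Each query token can match a different region of the page, such as a chart axis or a table cell. That matching is why layout-heavy pages can be retrieved without first parsing them into text.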

How we built an MCP Agent from scratch (8 min. read)

Tired of bloated agent stacks, Miguel and Alex built the Kubrick Agent from scratch using just Groq API calls, a Pixeltable memory layer, and a lightweight MCP client. It routes tasks, fetches tools remotely, and stores conversations with full traceability. No frameworks, no fluff, just clean modular logic that hooks into a real MCP server and actually works. It's a hands-on blueprint for anyone serious about building agents that don’t rely on shortcuts.
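Under the hood, an MCP client and server exchange JSON-RPC 2.0 messages. The client discovers tools with `tools/list` and invokes one with `tools/call`. The sketch below shows that exchange in-process and makes several simplifying assumptions. The tool `search_clips` is hypothetical. `fake_server` stands in for a real server and transport. The `initialize` handshake that a real client must send first is omitted. This is not the Kubrick Agent's code.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

_next_id = count(1)


def make_request(method: str, params: Optional[Dict[str, Any]] = None) -> str:
    # MCP messages are JSON-RPC 2.0 objects; each request carries a unique id.
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_next_id), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def fake_server(raw: str) -> str:
    # Hypothetical in-process stand-in for a real MCP server with one tool.
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result: Dict[str, Any] = {"tools": [{
            "name": "search_clips",
            "description": "Find video clips matching a text query",
            "inputSchema": {"type": "object",
                            "properties": {"query": {"type": "string"}},
                            "required": ["query"]},
        }]}
    elif req["method"] == "tools/call":
        if req["params"]["name"] != "search_clips":
            return _error(req["id"], -32602, "Unknown tool")
        query = req["params"]["arguments"]["query"]
        result = {"content": [{"type": "text", "text": f"3 clips found for '{query}'"}],
                  "isError": False}
    else:
        return _error(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


# Discover tools, then call the first one with arguments matching its schema.
tools = json.loads(fake_server(make_request("tools/list")))["result"]["tools"]
call = make_request("tools/call", {"name": tools[0]["name"], "arguments": {"query": "opening scene"}})
reply = json.loads(fake_server(call))
print(reply["result"]["content"][0]["text"])  # 3 clips found for 'opening scene'
```

The client never hard-codes what a tool does. It reads the tool name and input schema from `tools/list` and then builds the `tools/call` request from them.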

Hugging Face Audio Course (Course)

Hugging Face’s new Audio Course shows how transformers excel at audio tasks such as speech recognition, audio classification, and text-to-speech, with real-time demos. It guides engineers who already know deep learning through preprocessing audio, building audio-specific transformer pipelines, and training models. No audio background is needed. With pre-trained models, hands-on exercises, quizzes, and community support, you’ll build everything from music genre classifiers to ASR and TTS systems. By the end you’ll understand the nuances of audio data and have a practical toolkit for deploying powerful audio transformers.
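Audio preprocessing usually starts by slicing a waveform into short, overlapping, windowed frames. Each frame then goes through an FFT and a mel filterbank to form the log-mel spectrogram that models like Whisper consume. In practice, Hugging Face feature extractors handle this step for you. The sketch below shows only the framing step in pure Python. It assumes common speech settings: 16 kHz audio, 25 ms frames (400 samples), a 10 ms hop (160 samples), and a Hann window. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def hann_window(n: int) -> List[float]:
    # Symmetric Hann window; tapers frame edges to reduce spectral leakage.
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def frame_signal(samples: Sequence[float], frame_length: int, hop_length: int) -> List[List[float]]:
    # Slice the waveform into overlapping frames (no padding) and window each one.
    window = hann_window(frame_length)
    n_frames = 1 + (len(samples) - frame_length) // hop_length
    return [
        [samples[start + i] * window[i] for i in range(frame_length)]
        for start in (f * hop_length for f in range(n_frames))
    ]


sr = 16_000
tone = [math.sin(2 * math.pi * 440 * t / sr) for t in range(sr)]  # 1 s of a 440 Hz tone
frames = frame_signal(tone, frame_length=400, hop_length=160)  # 25 ms frames, 10 ms hop
print(len(frames), len(frames[0]))  # 98 400
```

One second of audio becomes 1 + (16000 − 400) // 160 = 98 frames. This fixed frame rate is what gives spectrogram-based models a regular time axis.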

Reinforcement Learning, Kernels, Reasoning, Quantization & Agents by Daniel Han (Video)

Daniel Han breaks down how reinforcement learning is being reimagined through concepts like reward quantization, kernelized policies, and relational reasoning. He challenges the standard RL pipeline, arguing that many breakthroughs come not from new algorithms but from better data, smarter interfaces, and modular agent design. The talk connects RL with modern agent architectures and hints at a future where reasoning, memory, and low-bit optimization unlock real-world generalization.
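The “low-bit optimization” thread in the talk builds on weight quantization: storing weights as small integers plus a scale factor. The sketch below shows generic symmetric absmax quantization, which is a simple textbook scheme. It is not the specific dynamic-quantization method discussed in the talk. The 4-bit setting and the function names are assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def quantize_absmax(weights: Sequence[float], bits: int) -> Tuple[List[int], float]:
    # Symmetric absmax quantization: the largest |w| maps to the top signed level.
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit, 127 for 8-bit
    peak = max(abs(w) for w in weights)
    if peak == 0:
        return [0] * len(weights), 1.0  # all-zero tensor: any scale works
    scale = peak / qmax
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: Sequence[int], scale: float) -> List[float]:
    # Recover approximate float weights from the integers and the shared scale.
    return [v * scale for v in q]


w = [0.5, -1.0, 0.25, 0.1]
q, scale = quantize_absmax(w, bits=4)
restored = dequantize(q, scale)
print(q, max(abs(a - b) for a, b in zip(w, restored)))
```

Each weight's reconstruction error is at most half a quantization step (`scale / 2`). One large outlier therefore inflates the error for every other weight in the tensor, which is a key motivation for more careful low-bit schemes.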

print("Tools & Resources")

TRENDING MODELS

Text Generation

moonshotai/Kimi-K2-Instruct

⇧ 195k Downloads

Kimi K2 Instruct is a 1T-parameter Mixture-of-Experts model with 32B active parameters. It is built for agentic tasks such as coding, tool use, and reasoning, and it has a 128K-token context window. Benchmarks show top-tier coding and reasoning performance that rivals GPT-4-class models.

Text Generation

Qwen/Qwen3-235B-A22B-Instruct-2507

⇧ 1.32k Downloads

This non-thinking variant of Qwen3 activates 22B of its 235B parameters per token and offers a 256K-token context window, delivering strong instruction-following performance in an open-source package. Benchmarks show it outperforms its predecessor and rivals closed-source models on leaderboards.

Text Generation

Qwen/Qwen3-Coder-480B-A35B-Instruct

Qwen3 Coder is a 480B-parameter Mixture-of-Experts instruct model with 35B active parameters, specialized in code generation. It is designed to handle large-context tasks with high precision and is openly available. It builds on Qwen’s architecture with enhanced developer tooling.

Audio-Text-to-Text

mistralai/Voxtral-Mini-3B-2507

⇧ 46.4k Downloads

Voxtral Mini is a 4.7B-parameter multimodal model built on a 3B language backbone and designed for local and edge deployment. It handles speech transcription, translation, summarization, and function calling on up to 40 minutes of audio. It outperforms Whisper-large in accuracy and cost efficiency.

Audio-Text-to-Text

mistralai/Voxtral-Small-24B-2507

⇧ 1.9k Downloads

Voxtral Small scales the Mini model up to 24B parameters for production-grade audio-text understanding, including ASR, summarization, and multilingual support. It matches the performance of GPT-4o mini and Gemini Flash.

TRENDING AI TOOLS

🧑‍💻 Gemini Code Assist: AI pair programmer that suggests multi-file code changes and understands your entire codebase.

🧩 Composite: Local browser‑based AI agent that automates clicks, typing, and navigation on any website without setup or API integrations.

🎭 Act-Two: Runway’s motion-capture AI model, now available via API.

📚 Anara: AI research assistant that helps you read, organize, and write scientific content.

That’s it for today!

Before you go, we’d love to know what you thought of today's newsletter so we can improve the pulse experience for you.

What did you think of today's pulse? Your feedback helps me create better emails for you!

See you soon,

Andres